Introduction to Language Understanding Intelligent Service (LUIS)

LUIS is Microsoft's offering for adding natural language understanding to your applications. It is based on machine learning and can add language understanding to any kind of application, e.g. desktop, web, or bot.

In this post, I’ll explain the basics of working with LUIS and how to build a simple model in it.

What does LUIS do?

A language understanding framework derives structure from spoken or written sentences. For example, I can greet a person in multiple ways:

Hi

Hello Jack

Yo

What’s up Jack

and many more.

Although these utterances look different, they have one thing in common: “Intention”. All of them are meant to greet someone. In two of them, you are also greeting a specific person named “Jack”. This is what a language understanding framework does for you: for every sentence fed to LUIS, it tells you the intent, the entities involved, and the values of those entities.

Let’s take the utterances ‘What’s up Jack’ and ‘Hello Jack’. In both cases, LUIS would return:

Intent -> Greeting

Entity -> Person [Value -> Jack]

To make it clearer, let’s take something more complex, like ‘Book me a flight from Bangalore to Chicago on 17 Oct’. In this case, LUIS would return:

Intent -> FlightBooking

Entity -> City [Values -> Bangalore, Chicago]

Entity -> Date [Value -> 17 Oct 2018]

With this kind of breakdown of the sentence, your application can process and respond to it in a structured way.
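To make that concrete, here is a minimal Python sketch of an app consuming such a breakdown. The `Entity` and `Prediction` classes and the `respond` function are hypothetical names for illustration; this simplified shape is not the JSON that LUIS actually returns, and the values are hand-written from the two examples above.

```python
from dataclasses import dataclass, field


@dataclass
class Entity:
    type: str   # entity type, e.g. "City" or "Person"
    value: str  # extracted value, e.g. "Bangalore"


@dataclass
class Prediction:
    intent: str
    entities: list[Entity] = field(default_factory=list)


def respond(prediction: Prediction) -> str:
    """Route a structured prediction to a reply based on its intent (hypothetical handlers)."""
    if prediction.intent == "Greeting":
        people = [e.value for e in prediction.entities if e.type == "Person"]
        return f"Hello {people[0]}!" if people else "Hello!"
    if prediction.intent == "FlightBooking":
        cities = [e.value for e in prediction.entities if e.type == "City"]
        dates = [e.value for e in prediction.entities if e.type == "Date"]
        if len(cities) < 2 or not dates:
            return "Where and when would you like to fly?"
        return f"Searching flights from {cities[0]} to {cities[1]} on {dates[0]}."
    return "Sorry, I didn't understand that."


# The two breakdowns from the text, written by hand for illustration.
greeting = Prediction("Greeting", [Entity("Person", "Jack")])
flight = Prediction(
    "FlightBooking",
    [Entity("City", "Bangalore"), Entity("City", "Chicago"), Entity("Date", "17 Oct 2018")],
)
print(respond(greeting))  # Hello Jack!
print(respond(flight))    # Searching flights from Bangalore to Chicago on 17 Oct 2018.
```

The point is that the app branches on the intent name and reads entity values by type, instead of parsing free text itself.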

How does LUIS work?

To integrate your application with LUIS, you need to create a LUIS model. A basic LUIS model consists of:

- Intent

- Utterances

- Entities
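One way to picture how these three pieces relate is as plain data. The sketch below is a hypothetical in-memory representation (the class names and the character-offset labels are assumptions for illustration, not LUIS's own export format): intents own example utterances, and utterances carry labels that point at entities declared on the model.

```python
from dataclasses import dataclass, field


@dataclass
class LabeledEntity:
    entity: str  # name of an entity declared on the model, e.g. "TaxiBooking.Address"
    start: int   # index of the first labeled character
    end: int     # index one past the last labeled character (slice convention)


@dataclass
class Utterance:
    text: str
    labels: list[LabeledEntity] = field(default_factory=list)

    def labeled_text(self, label: LabeledEntity) -> str:
        """Return the part of the sentence covered by a label."""
        return self.text[label.start:label.end]


@dataclass
class Intent:
    name: str
    utterances: list[Utterance] = field(default_factory=list)


@dataclass
class Model:
    intents: list[Intent]
    entities: list[str]

    def undeclared_entities(self) -> list[str]:
        """Entity names used in labels but missing from self.entities."""
        used = {
            label.entity
            for intent in self.intents
            for utterance in intent.utterances
            for label in utterance.labels
        }
        return sorted(used - set(self.entities))


text = "Take me to the airport"
start = text.index("the airport")
address = LabeledEntity("TaxiBooking.Address", start, start + len("the airport"))

model = Model(
    intents=[
        Intent("Greeting", [Utterance("Hi"), Utterance("Hello Jack")]),
        Intent("TaxiBooking.TaxiRequest", [Utterance(text, [address])]),
    ],
    entities=["TaxiBooking.Address"],
)
print(model.intents[1].utterances[0].labeled_text(address))  # the airport
print(model.undeclared_entities())  # []
```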

Let me walk through an example of creating a basic LUIS model, using a taxi booking app. This kind of app would have many scenarios; we will pick two of them (greeting and ordering a taxi) and see how to build those scenarios in LUIS.

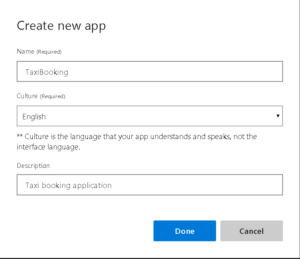

Step 1: Create a LUIS App.

Just go to www.luis.ai, sign in and create a new LUIS app.

Step 2: Create intents

In this step, you create the intents that your application domain needs to understand. In our case, we would have intents like:

- Greeting: For handling greetings from your users, such as “hi” and “hello”.

- TaxiBooking.TaxiRequest: When users want to book a taxi to a specified destination.

- TaxiBooking.TrackTaxi: When users want to track the taxi they booked.

- TaxiBooking.CancelRequest: When users want to cancel a taxi booking.

Depending on how complex your app is, there could be many other intents it needs to understand.

For the purpose of this post, I will model the first two intents: Greeting and TaxiBooking.TaxiRequest.

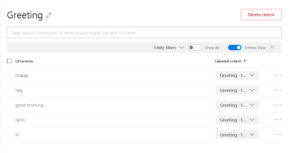

To start, go to the Intents section of your app (in the left-hand menu) and create a new intent called Greeting.

For this intent, add at least 5 example utterances that show how users might express it.

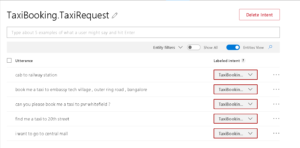

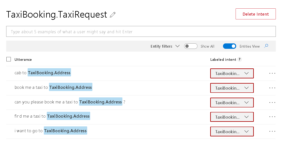

Similarly, create a new intent for taxi booking called TaxiBooking.TaxiRequest and add utterances for it.
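Before training, it can help to sanity-check your example utterances. This is a small hypothetical helper, assuming your utterances are kept in a Python dict keyed by intent name; the minimum of 5 comes from the suggestion above, and the sample utterances are made up for illustration.

```python
MIN_UTTERANCES = 5  # the minimum suggested in this post for each intent

# Hypothetical example utterances, grouped by intent name.
training_data = {
    "Greeting": ["Hi", "Hello", "Hello Jack", "What's up Jack", "Good morning"],
    "TaxiBooking.TaxiRequest": [
        "Book me a taxi to the airport",
        "I need a cab to 25th avenue street",
        "Get me a ride to the railway station",
    ],
}


def intents_needing_examples(data: dict[str, list[str]], minimum: int = MIN_UTTERANCES) -> dict[str, int]:
    """Map each intent with fewer than `minimum` distinct utterances to how many more it needs."""
    missing = {}
    for intent, utterances in data.items():
        # Ignore case and surrounding whitespace when counting distinct utterances.
        distinct = {u.strip().lower() for u in utterances}
        if len(distinct) < minimum:
            missing[intent] = minimum - len(distinct)
    return missing


print(intents_needing_examples(training_data))  # {'TaxiBooking.TaxiRequest': 2}
```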

The utterances you provide are the first step in training your model and teaching LUIS what user input means.

For a production app, you would want to train your model much more than this by providing more utterances that represent the different ways users can interact with your app.

To be clear, this does not mean you must provide every possible utterance and that anything outside this set won’t work. Based on the utterances provided, LUIS can recognize other, similar utterances by itself. I’ll show you an example of this later.
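To give an intuition for matching sentences that were never in the training set, here is a deliberately simple toy classifier that picks the intent of the most similar example utterance by word overlap (Jaccard similarity). This is a swapped-in technique for illustration only and is NOT how LUIS works internally; LUIS uses trained machine learning models. The function names, the 0.2 threshold, and the examples are hypothetical.

```python
import re

# Hypothetical training examples, grouped by intent.
EXAMPLES = {
    "Greeting": ["Hi", "Hello Jack", "What's up Jack", "Good morning"],
    "TaxiBooking.TaxiRequest": [
        "Book me a taxi to the airport",
        "I need a cab to 25th avenue street",
    ],
}


def tokens(text: str) -> set[str]:
    """Lowercased word tokens; apostrophes are kept so "what's" stays one token."""
    return set(re.findall(r"[a-z0-9']+", text.lower()))


def jaccard(a: set[str], b: set[str]) -> float:
    """Size of the intersection divided by size of the union (0.0 for two empty sets)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def classify(text: str, examples: dict[str, list[str]], threshold: float = 0.2) -> tuple[str, float]:
    """Return (intent, score) of the most similar example, or ("None", score) below the threshold."""
    query = tokens(text)
    best_intent, best_score = "None", 0.0
    for intent, utterances in examples.items():
        for utterance in utterances:
            score = jaccard(query, tokens(utterance))
            if score > best_score:
                best_intent, best_score = intent, score
    return (best_intent, best_score) if best_score >= threshold else ("None", best_score)


print(classify("hello there Jack", EXAMPLES)[0])            # Greeting
print(classify("book a taxi to the station", EXAMPLES)[0])  # TaxiBooking.TaxiRequest
print(classify("Yo", EXAMPLES))                             # ('None', 0.0)
```

Neither test sentence appears verbatim in the examples, yet both are matched. Word overlap cannot match a word it has never seen, though, such as ‘Yo’; handling that kind of input is where a trained model like LUIS earns its keep.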

Step 3: Create and tag entities

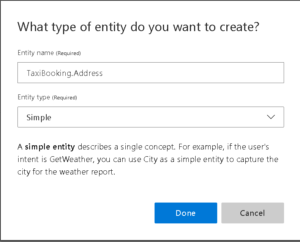

The next step is to create entities and mark them in the utterances you provided. In our case, we create one entity that represents the destination address the user wants to go to.

To create an entity, go to the left-hand menu and create a new entity named TaxiBooking.Address.

Next, go back to the TaxiBooking.TaxiRequest intent and tag the address part of each utterance with the TaxiBooking.Address entity. This helps LUIS learn how and where in a sentence an address can occur.

NOTE: To tag multiple words as a single entity, e.g. “25th avenue street” as an address, click the first word of the entity (i.e. 25th) and then the last word (i.e. street); you can then tag the whole phrase.
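Conceptually, a multi-word label covers one contiguous stretch of the sentence, from the start of the first word you click to the end of the last. The hypothetical helper below computes that stretch as character offsets; it uses plain substring search, so it is only a sketch and is not aware of word boundaries.

```python
def span_from_words(text: str, first_word: str, last_word: str) -> tuple[int, int]:
    """Return (start, end) offsets covering first_word through last_word; end is exclusive.

    Finds the first occurrence of first_word, then the first occurrence of
    last_word at or after it. Raises ValueError if either word is missing.
    """
    start = text.index(first_word)
    end = text.index(last_word, start) + len(last_word)
    return start, end


text = "Please take me to 25th avenue street now"
start, end = span_from_words(text, "25th", "street")
print(start, end, repr(text[start:end]))  # 18 36 '25th avenue street'
```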

Step 4: Train, test and publish the model.

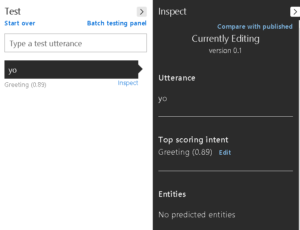

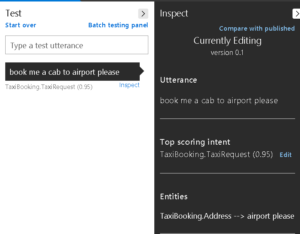

The final step is to train the model by clicking the ‘Train’ button in the top-right corner. Once the model is trained, you can use the built-in test console to test it. Here are a few screenshots I took after testing the model.

Greeting intent:

TaxiBooking.TaxiRequest intent:

As you can see, I gave LUIS some inputs and it was able to match them. It also shows a confidence score for each match: for the greeting, the score is 89% (which, by the way, is very good), and for the second example, it matched the intent with a score of 92% and also extracted the entity and its value (i.e. KNK courtyard) from the sentence.
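In your own app you will usually not act on every prediction blindly. A common pattern, sketched here under the assumption that you already have a dict of intent scores between 0 and 1, is to take the top-scoring intent and fall back to a default when its score is too low. The `pick_intent` name and the 0.5 threshold are hypothetical; tune the threshold against your own test results. (LUIS apps also come with a built-in None intent for input that fits no other intent.)

```python
def pick_intent(scores: dict[str, float], threshold: float = 0.5) -> str:
    """Return the top-scoring intent, or "None" if even the best score is below the threshold."""
    if not scores:
        return "None"
    top_intent = max(scores, key=scores.get)
    return top_intent if scores[top_intent] >= threshold else "None"


# 0.89 is the greeting score from the test above; the other scores are made up.
print(pick_intent({"Greeting": 0.89, "TaxiBooking.TaxiRequest": 0.03}))  # Greeting
print(pick_intent({"Greeting": 0.31, "TaxiBooking.TaxiRequest": 0.27}))  # None
```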

The most important point is that my inputs were not exact copies of my example utterances (e.g. I never provided ‘Yo’ in my greeting utterances), yet LUIS was still able to match them. This is the whole point of using a system like LUIS.

Once you are satisfied with your changes, you can ‘Publish’ your model, and it becomes available at an endpoint for use in your apps.
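A published model is called over HTTP. The sketch below assumes the LUIS V3 prediction URL shape (`/luis/prediction/v3.0/apps/{appId}/slots/{slot}/predict` with `subscription-key` and `query` parameters) and a V3-style JSON response containing `prediction.topIntent` and `prediction.intents`; check both against the current LUIS documentation for your resource. The endpoint, app ID, and key values are placeholders, and the sample response is hand-written.

```python
import json
from urllib.parse import quote, urlencode
from urllib.request import urlopen


def build_prediction_url(endpoint: str, app_id: str, key: str, query: str, slot: str = "production") -> str:
    """Build a GET URL for a published app (assumed V3 prediction URL shape)."""
    params = urlencode({"subscription-key": key, "query": query})
    return f"{endpoint.rstrip('/')}/luis/prediction/v3.0/apps/{quote(app_id)}/slots/{slot}/predict?{params}"


def top_intent(response: dict) -> tuple[str, float]:
    """Extract (topIntent, score) from an assumed V3-style JSON response."""
    prediction = response["prediction"]
    name = prediction["topIntent"]
    return name, prediction["intents"][name]["score"]


def predict(endpoint: str, app_id: str, key: str, query: str) -> tuple[str, float]:
    """Call the endpoint and return (intent, score). Needs network access and a real key."""
    with urlopen(build_prediction_url(endpoint, app_id, key, query)) as resp:
        return top_intent(json.load(resp))


url = build_prediction_url(
    "https://YOUR-RESOURCE.cognitiveservices.azure.com",  # placeholder endpoint
    "YOUR-APP-ID",  # placeholder app ID
    "YOUR-KEY",  # placeholder key
    "hello there",
)
print(url)

# Hand-written sample in the assumed response shape (no network call).
sample_response = {
    "query": "Yo",
    "prediction": {"topIntent": "Greeting", "intents": {"Greeting": {"score": 0.89}}, "entities": {}},
}
print(top_intent(sample_response))  # ('Greeting', 0.89)
```

Keeping URL building and response parsing separate from the HTTP call makes both easy to test without a live key.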

Is it all that perfect?

Well, at this point you might be thinking that this is great and simple, but let me show you another example that brings us back to reality: nothing is perfect :-).

Notice that in this example, the entity value LUIS extracted is ‘airport please’, which is wrong.

I wanted to show you this example to emphasize the need to train your model with many different kinds of utterances, which help LUIS understand where an entity such as an address can occur in a sentence and in what context.

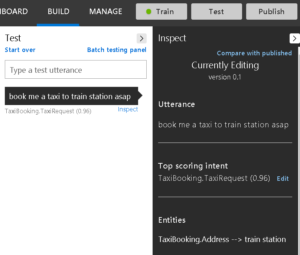

To resolve this issue, I provided LUIS with just one more utterance in which the address does not come at the end, and then tested again with a similar but different input, as shown above. This time LUIS extracted the address correctly.
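A quick way to spot this kind of bias in your own labeled data is to count where the entity sits in each utterance. The helper below is a hypothetical sketch that assumes each labeled utterance is stored as a `(text, entity_value)` pair; the sample utterances are made up for illustration.

```python
def entity_positions(labeled: list[tuple[str, str]]) -> dict[str, int]:
    """Count labeled entities that end the utterance vs. ones followed by more words."""
    counts = {"at_end": 0, "elsewhere": 0}
    for text, value in labeled:
        start = text.lower().index(value.lower())  # ValueError if the value is not in the text
        tail = text[start + len(value):].strip(" .!?")  # words after the entity, minus punctuation
        counts["at_end" if not tail else "elsewhere"] += 1
    return counts


before = [
    ("Take me to the airport", "the airport"),
    ("I need a cab to 25th avenue street", "25th avenue street"),
    ("Book a taxi to KNK courtyard", "KNK courtyard"),
]
print(entity_positions(before))  # {'at_end': 3, 'elsewhere': 0}

after = before + [("Drop me at 25th avenue street quickly", "25th avenue street")]
print(entity_positions(after))   # {'at_end': 3, 'elsewhere': 1}
```

If `elsewhere` is zero, every address in the training data ends the sentence, which is the pattern that led LUIS to include ‘please’ in the extracted value.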

I hope this post gives you a good understanding of how an NLP engine like LUIS works. These concepts are not specific to LUIS: most language understanding engines out there, e.g. Dialogflow (from Google) and Wit.ai (from Facebook), work on the same concepts of intents, entities, and utterances.

So if you understand one, you can probably learn the others very quickly.

LUIS is part of a much bigger machine-learning-based AI offering called Microsoft Cognitive Services, which includes services for vision, knowledge inference, speech, text, and more. To get a high-level overview of Cognitive Services, you can check out this Pluralsight course.